OS 8.01 - Memory Management

Memory Access and Protection

Main memory and the registers built into each processing core are the only general-purpose storage that the CPU can access directly. If the data are not in memory, they must be moved there before the CPU can operate on them.

Registers that are built into each CPU core are generally accessible within one cycle of the CPU clock. On the other hand, completing a memory access may take many cycles of the CPU clock. In such cases, the processor normally needs to stall, since it does not have the data required to complete the instruction it is executing.

To mitigate this, fast memory, typically on the CPU chip, is added between the CPU and main memory for quick access. This fast memory is called a cache.

Not only are we concerned with the relative speed of accessing physical memory, but we also need to ensure correct operation. To maintain proper system operation, we must protect the operating system from access by user processes and protect user processes from one another. This protection must be provided by the hardware, as the operating system does not typically intervene between the CPU and memory accesses.

Process Separation and Memory Protection

First, we need to ensure that each process has a separate memory space. This separate memory space protects processes from each other and is fundamental for having multiple processes loaded in memory for concurrent execution.

To achieve separate memory spaces, we must have the ability to determine the range of legal addresses a process may access and ensure the process can access only these legal addresses.

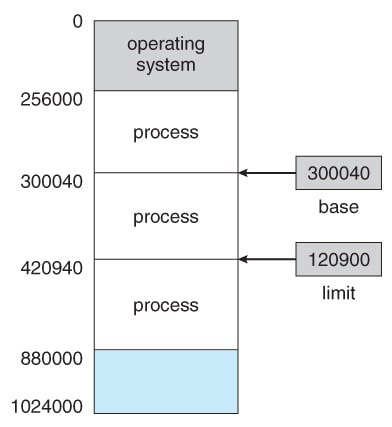

This protection can be provided by using two registers: a base register and a limit register. The base register holds the smallest legal physical memory address, and the limit register specifies the size of the range for logical addresses.

For example, if the base register holds 300040 and the limit register is 120900, the program can legally access all addresses from 300040 through 420939 (inclusive).

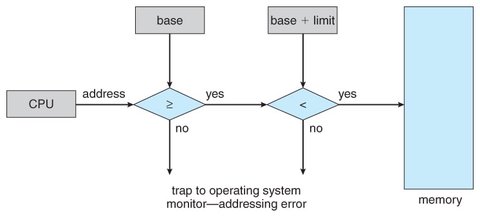

Protection of memory space is achieved by having the CPU hardware compare every address generated in user mode with the values in the registers. Any attempt by a program executing in user mode to access operating-system memory or another user’s memory results in a trap to the operating system, which treats the attempt as a fatal error.

The operating system, executing in kernel mode, is granted unrestricted access to both operating-system memory and user memory.

Memory Protection

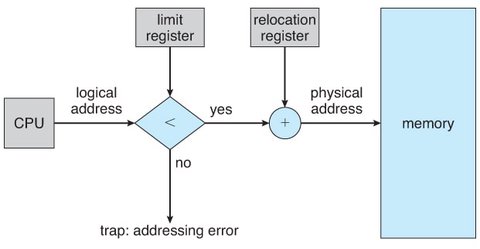

Each logical address must fall within the range specified by the limit register. The Memory Management Unit (MMU) maps the logical address dynamically by adding the value in the relocation register. This mapped address is then sent to memory.

When the CPU scheduler selects a process for execution, the dispatcher loads the relocation and limit registers with the correct values as part of the context switch. Since every address generated by the CPU is checked against these registers, both the operating system and other users’ programs and data are protected from being modified by the running process.

Address Binding

A program resides on disk as a binary executable file. To run, the program must be brought into memory and placed within the context of a process, making it eligible for execution on an available CPU.

When the process terminates, its memory is reclaimed for use by other processes.

Compile Time: If the memory address is known at compile time, absolute code can be generated.

Load Time: If the memory address is not known at compile time, the compiler generates relocatable code, and final binding is delayed until load time.

Execution Time: If the process can be moved during execution from one memory segment to another, binding is delayed until runtime. This requires special hardware and is the method most operating systems use.

Logical vs. Physical Memory

An address generated by the CPU is called a logical address, whereas an address seen by the memory unit (the address loaded into the memory-address register of the memory) is called a physical address.

The set of all logical addresses generated by a program is the logical address space. The set of all physical addresses corresponding to these logical addresses is the physical address space.

The run-time mapping from virtual to physical addresses is done by the Memory Management Unit (MMU). The base register is now referred to as the relocation register. The value in the relocation register is added to every address generated by a user process before the address is sent to memory.

Dynamic Loading

In the past, it was necessary for the entire program and all data of a process to be in physical memory for the process to execute. This limited the size of a process to the size of physical memory. To optimize memory space utilization, we use dynamic loading.

With dynamic loading, a routine is not loaded until it is called. All routines are kept on disk in a relocatable load format. The main program is loaded into memory and executed. When a routine needs to call another routine, the calling routine first checks whether the other routine has been loaded. If it hasn’t, the relocatable linking loader is called to load the desired routine into memory and update the program’s address tables. Control is then passed to the newly loaded routine.

Contiguous Memory Allocation

Memory is typically divided into two partitions: one for the operating system and one for user processes.

In contiguous memory allocation, each process is assigned a single section of memory that is contiguous to the section containing the next process. One of the simplest methods of allocating memory is to assign processes to variably sized partitions in memory, where each partition contains exactly one process.

In this variable-partition scheme, the operating system maintains a table that indicates which parts of memory are available and which are occupied. Initially, all memory is available for user processes and is considered a large block of available memory (a “hole”). As memory is used, it is divided into a set of holes of various sizes.

Variable Partition

When memory blocks are allocated, they comprise a set of holes of various sizes scattered throughout memory. When a process arrives and requires memory, the system searches for a hole large enough to accommodate it. If the hole is too large, it is split into two parts. One part is allocated to the process, and the other is returned to the set of holes.

This procedure is a particular instance of the dynamic storage-allocation problem, which concerns how to satisfy a request of size n from a list of free holes. The most commonly used strategies for selecting a free hole are:

First Fit: Allocate the first hole that is large enough. Searching can start either at the beginning of the set or at the location where the previous search ended. The search stops once a free hole large enough is found.

Best Fit: Allocate the smallest hole that is large enough. This requires searching the entire list unless the list is ordered by size. This strategy minimizes leftover space in the hole.

Worst Fit: Allocate the largest hole. The entire list must be searched unless it is sorted by size. This strategy leaves the largest possible leftover hole, which may be more useful than the smaller leftover hole from a best-fit approach.

Fragmentation

Both the first-fit and best-fit strategies for memory allocation suffer from external fragmentation, where the free memory space is broken into small, scattered pieces. External fragmentation occurs when there is enough total memory space to satisfy a request, but the available spaces are not contiguous.

To address this issue, the physical memory can be divided into fixed-sized blocks, and memory is allocated in units based on block size. This method can result in internal fragmentation, where the memory allocated to a process is slightly larger than the requested memory, leaving unused space inside the partition.

One solution to external fragmentation is compaction, where memory contents are rearranged to create one large block of free memory.

Segmentation

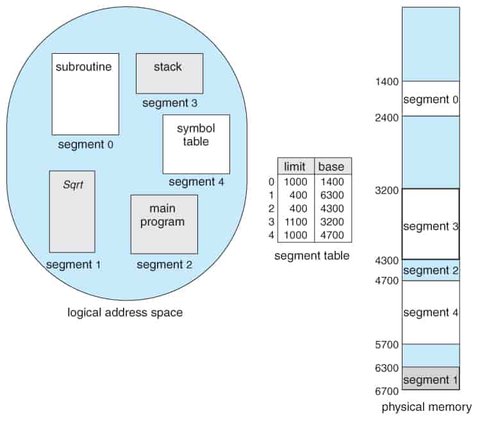

Segmentation is a memory-management scheme that maps the programmer’s view of memory to the actual physical memory. It allows the system to manage memory while providing the programmer with a more natural programming environment.

A programmer typically views memory as a collection of variable-sized segments, each defined by its purpose in the program. These segments might include the main program, methods, procedures, functions, and various data structures (e.g., objects, arrays, stacks, and variables).

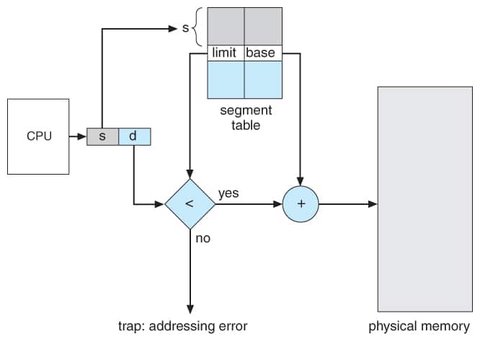

Each segment in a logical address space has a name and a length. The logical address consists of two parts: a segment number and an offset within that segment. The segment number is used as an index to the segment table.

Segmentation Hardware

To map two-dimensional user-defined addresses (segment number and offset) into a one-dimensional physical address, a segment table is used. Each entry in the segment table has a segment base (the starting physical address of the segment in memory) and a segment limit (the length of the segment).

A logical address consists of two parts: a segment number (s) and an offset (d). The segment number is used to index the segment table. The offset d must be between 0 and the segment limit. If the offset is invalid, the system triggers a trap to the operating system. When the offset is valid, it is added to the segment base to produce the physical memory address of the desired byte.