OS 8.02 - Paging

Paging

One solution to the problem of external fragmentation is to allow the logical address space of processes to be noncontiguous. This enables a process to be allocated physical memory wherever it is available. This strategy is used in paging, which is the most common memory-management technique in modern computer systems.

Paging is a memory-management scheme that allows a process’s physical address space to be noncontiguous. It avoids both external fragmentation and the need for compaction—two major issues associated with contiguous memory allocation. Because it offers several advantages, paging in its various forms is widely used in operating systems, from those designed for large servers to those running on mobile devices.

Paging is implemented through a collaboration between the operating system and the computer hardware.

Basic Method of Paging

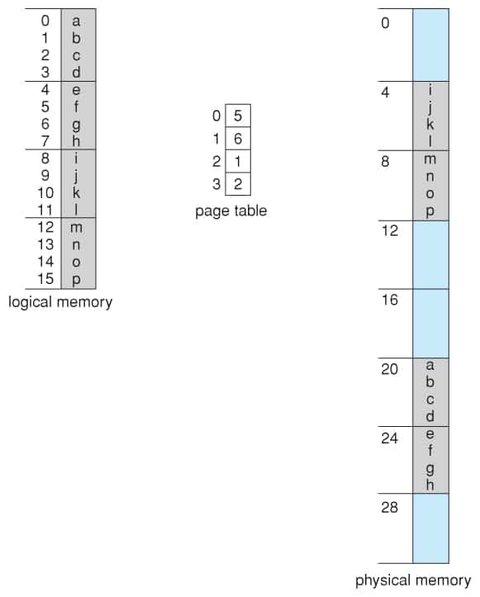

The basic method for implementing paging involves dividing physical memory into fixed-sized blocks called frames, and breaking logical memory into blocks of the same size, called pages.

When a process is executed, its pages are loaded into available memory frames from their source (e.g., a file system or backing store). The backing store is divided into fixed-sized blocks that are the same size as the memory frames, or groups of multiple frames.

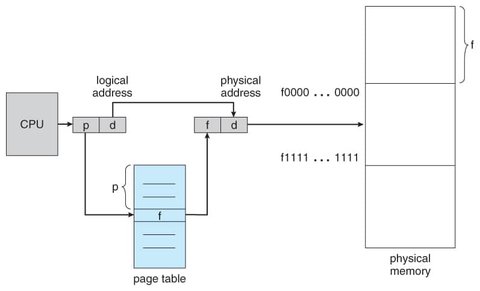

Every address generated by the CPU is divided into two parts: a page number (p) and a page offset (d).

The page number is used as an index into a per-process page table.

The page table contains the base address of each frame in physical memory.

The offset is the location within the frame being referenced.

The base address of the frame is combined with the page offset to define the physical memory address.

The page size (like the frame size) is determined by the hardware. The size of a page is typically a power of 2, ranging from 4 KB to 1 GB, depending on the computer architecture.

When using a paging scheme, there is no external fragmentation: any free frame can be allocated to a process that needs it. However, internal fragmentation may still occur.

Currently, page sizes are typically either 4 KB or 8 KB, although some systems support even larger page sizes. For example, the output of the command getconf PAGESIZE on Unix systems typically returns 4096 bytes, which equals 4 KB.

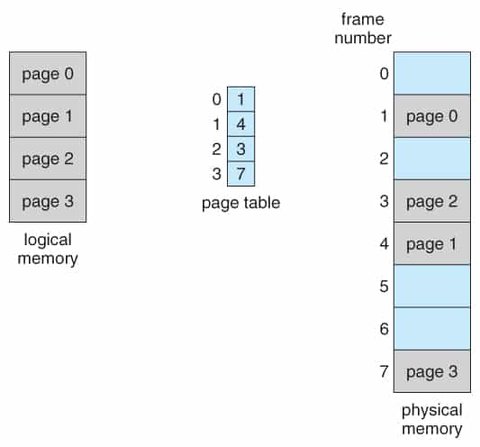

The first page of a process is loaded into one of the allocated frames, and the frame number is placed in the page table for this process. The next page is loaded into another frame, its frame number is added to the page table, and so on.

The programmer views memory as one single space containing only the program. However, the user program is actually scattered throughout physical memory, which may also contain other programs.

By definition, a user process is unable to access memory it does not own. It cannot address memory outside of its page table, which includes only those pages that the process owns.

Allocation details of physical memory—including which frames are allocated, which frames are free, and how many total frames exist—are typically stored in a single, system-wide data structure called a frame table. The frame table contains one entry for each physical page frame, indicating whether it is free or allocated, and if it is allocated, to which page and process (or processes) the frame is assigned.

The operating system maintains a copy of the page table for each process, similar to how it maintains copies of the instruction counter and register contents. This copy is used to translate logical addresses to physical addresses whenever the operating system needs to map a logical address to a physical one.

Hardware Support

Translation Look-Aside Buffer (TLB)

To access location i, the CPU must first index into the page table, using the page number for i and combining it with the frame number to produce the actual address and accessing desired place in memory, thus requiring two memory accesses.

The standard solution to this problem is the Translation Look-Aside Buffer (TLB), a special, small, fast-lookup hardware cache. The TLB is associative high-speed memory. Each entry in the TLB consists of two parts: a key (or tag) and a value. When an item is presented to the associative memory, it is compared with all keys simultaneously. If a match is found, the corresponding value is returned.

The search in the TLB is fast, as a TLB lookup in modern hardware is part of the instruction pipeline, essentially adding no performance penalty.

The TLB contains only a few page-table entries. When the CPU generates a logical address, the Memory Management Unit (MMU) first checks if its page number is in the TLB. If the page number is found, the corresponding frame number is used to access memory immediately.

If the page number is not in the TLB (a TLB miss), the address translation continues by referring to the page table. The frame number is then obtained and used to access memory. The page number and frame number are then added to the TLB, so they can be quickly found on the next reference.

Some TLBs allow certain entries to be “wired down,” meaning they cannot be removed from the TLB. Typically, TLB entries for critical kernel code are wired down.

The percentage of times that the page number of interest is found in the TLB is called the hit ratio. For example, an 80% hit ratio means the desired page number is found in the TLB 80% of the time.

Paging Memory Protection

Memory protection in a paged environment is accomplished through protection bits associated with each frame. These bits are typically stored in the page table.

One additional bit, called the valid-invalid bit, is generally attached to each page table entry.

When the bit is set to valid, the page is part of the process’s logical address space and is therefore a legal (valid) page.

When the bit is set to invalid, the page is not part of the process’s logical address space, and illegal address references are trapped using the valid-invalid bit.

The operating system sets this bit for each page to allow or disallow access to the page. If a page’s valid-invalid bit is set to invalid, the computer traps to the operating system in case of an invalid page reference.

Swapping

In order for process instructions and data to be executed, they must be in memory. However, a process, or a portion of it, can be temporarily swapped out of memory to a backing store and then brought back into memory for continued execution. Swapping makes it possible for the total physical address space of all processes to exceed the system’s real physical memory, increasing the degree of multiprogramming.

Most modern systems, including Linux and Windows, use a variation of swapping where individual pages of a process (rather than the entire process) are swapped. This allows physical memory to be oversubscribed without incurring the cost of swapping entire processes, as typically only a small number of pages will be involved in the swap.

Unit Summary

Memory is a fundamental component of modern computer systems. It consists of a large array of bytes, each with a unique address, enabling the CPU to access data and instructions efficiently.

One way to allocate memory to each process is through base and limit registers. The base register holds the smallest legal physical memory address, while the limit register specifies the size of the address range allocated to the process. These registers help ensure that processes cannot access memory outside their allocated space, preventing memory errors and protecting other processes’ data.

Binding symbolic address references to actual physical addresses can occur at three different stages of program execution: (1) compile time, (2) load time, and (3) execution time. The binding process involves mapping logical addresses used by the program to physical addresses in memory.

An address generated by the CPU is referred to as a logical address (or virtual address). The Memory Management Unit (MMU) is responsible for translating this logical address to a corresponding physical address in the computer’s memory.

One traditional approach to memory allocation is to divide the physical memory into contiguous partitions of varying sizes. These partitions are allocated to processes based on one of three strategies: (1) first fit, where the first available partition is chosen, (2) best fit, where the smallest partition that fits is selected, and (3) worst fit, where the largest available partition is used.

Modern operating systems use paging to manage memory efficiently. In this scheme, physical memory is divided into fixed-sized blocks called frames, and logical memory is divided into blocks of the same size, called pages. This method eliminates external fragmentation and simplifies memory management.

When paging is used, a logical address is divided into two parts: a page number and a page offset. The page number acts as an index into a per-process page table, which contains the physical memory frame that holds the corresponding page. The page offset indicates the specific location within the frame. This allows the system to efficiently locate and access data in memory.

A Translation Look-Aside Buffer (TLB) is a hardware cache that stores a subset of the entries from the page table. Each entry in the TLB contains a page number and its corresponding frame number. The TLB speeds up address translation by reducing the need to access the page table for every memory reference.

When the CPU generates a logical address, the MMU first checks the TLB to see if the page number is present. If the page number is found in the TLB (a TLB hit), the corresponding frame is quickly retrieved. If the page number is not in the TLB (a TLB miss), the system must access the page table to retrieve the frame, which may take more time. After the frame is found, it is also added to the TLB for faster access in the future.

Swapping is a technique that allows the operating system to move pages of a process between main memory and secondary storage (such as a disk) to optimize memory usage and increase the degree of multiprogramming. By swapping pages in and out of memory, the system can run more processes simultaneously, even if the total memory required exceeds the available physical memory. This technique is essential for systems with limited memory resources.