OS - Unit-4 - Memory Management Answered

Memory Protection and Address Space

- What do you mean by memory protection? Elaborate on the facts of memory protection with proper justifications.

Answer :

Memory protection ensures one process cannot access the memory space of another process or the operating system. It provides isolation between processes and between user processes and system-level processes, thereby maintaining the integrity and security of data.

Memory protection helps keep the system safe and stable by:

Blocking malicious or faulty programs from accessing other processes’ or the operating system’s memory.

Preventing programs from accidentally overwriting important data.

Detecting illegal memory access and stopping the faulty process before it can harm the system.

Stopping processes from interfering with each other, which could cause crashes or unpredictable behavior.

Allowing only the operating system (running in kernel mode) to access protected memory areas.

Helping the operating system manage memory safely and share it properly between processes.

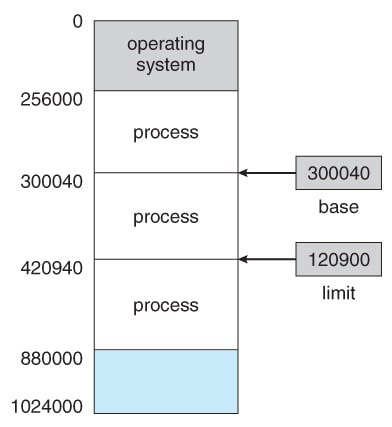

To implement memory protection, hardware support is required to determine the range of legal addresses a process may access. This is typically provided through two special-purpose registers:

Base Register: Contains the starting physical address where a process’s memory segment begins.

Limit Register: Specifies the size of the memory range allocated to the process.

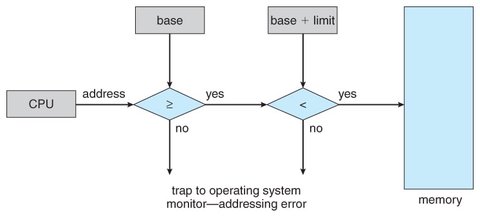

When a process attempts to access memory, the CPU hardware checks that every address generated by the user process falls within the range defined by the base and limit registers:

Legal Address: If the address is greater than or equal to the base and less than the base plus the limit, the access is allowed.

Illegal Address: If the address is outside this range, the process is trying to access memory that belongs to another process or to the operating system. The CPU raises an exception (trap), and control is transferred to the operating system, which handles the error, usually by terminating the offending process.

Example:

If the base register holds the value 300040 and the limit register is 120900, the process can legally access addresses from 300040 to 420939. Any access outside this range will cause a trap.
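The legal-address rule above can be sketched in a few lines of Python. This only illustrates the comparison the hardware performs on every access; it is not real hardware or OS code. The names `check_access` and `AddressingError` are hypothetical, and the register values are the ones from the example.

```python
class AddressingError(Exception):
    """Hypothetical stand-in for the trap the CPU raises on an illegal access."""


def check_access(address: int, base: int, limit: int) -> int:
    """Allow the access only if base <= address < base + limit; otherwise trap."""
    if base <= address < base + limit:
        return address
    raise AddressingError(
        f"trap: address {address} outside [{base}, {base + limit})"
    )


BASE, LIMIT = 300040, 120900  # register values from the example above

print(check_access(300040, BASE, LIMIT))  # lowest legal address
print(check_access(420939, BASE, LIMIT))  # highest legal address
try:
    check_access(420940, BASE, LIMIT)     # one past the range, so it traps
except AddressingError as err:
    print(err)
```

Because the upper bound is exclusive, 420940 (300040 + 120900) is already illegal, which is why the last legal address is 420939.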

The Memory Management Unit (MMU) dynamically maps logical addresses (used by programs) to physical addresses (used by hardware) using the values stored in the base and limit registers.

When a process is scheduled for execution:

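The dispatcher (part of the CPU scheduler) loads the base and limit registers with that process’s values. Loading these registers requires privileged instructions, so only the operating system can change them.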

Each address generated by the process is checked against these values before being allowed to access physical memory.

This hardware-level enforcement guarantees that no process can go beyond its allocated memory range.

Logical and Physical Address Space

- Distinguish between: Logical vs Physical address space.

Answer :

A logical address is the address the program uses to access memory, and it is generated by the CPU.

A physical address is the actual location in RAM where the data or instruction resides.

The logical address space is the set of addresses a process can use, while the physical address space is the set of addresses available in the system’s physical memory.

The Memory Management Unit (MMU) performs the translation from logical to physical addresses, ensuring memory protection and efficient utilization.

Logical Address Space

A logical address (also called a virtual address) is the address generated by the CPU during the execution of a program. This address is used by a process to refer to memory locations. However, it does not directly correspond to an actual location in physical memory.

The logical address space is the set of all logical addresses that a process can use. From the program’s point of view, it appears as if it has access to a large, continuous block of memory starting from address zero. This abstraction makes it easier for developers to write programs without worrying about the actual location of the program in memory.

Physical Address Space

The physical address space is the set of all physical addresses that can be used to store and retrieve data in the main memory. Physical addresses are determined by the system’s memory allocation mechanisms.

A physical address refers to a specific location in the physical memory (RAM). It is the actual address used by the hardware to access data. Under paging, the page frames allocated to a process need not be contiguous in physical memory.

Address Translation

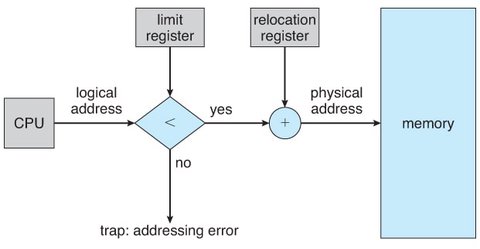

Logical addresses generated by a process are translated to physical addresses by a component called the Memory Management Unit (MMU). The user process never deals with physical addresses directly.

- The base register (also called the relocation register) contains the starting physical address of a process.

- The limit register defines the range of accessible addresses.

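When the process generates a logical address, the hardware first compares it with the limit register. If the logical address is less than the limit, it is added to the base (relocation) register to produce the physical address. Otherwise, the hardware traps to the operating system, which treats the access as an addressing error, preventing access to unauthorized memory.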
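The translation described above can be sketched as follows. The function name and the register values (relocation 14000, limit 3000) are illustrative assumptions; in a real system this check and addition are performed by the MMU hardware on every memory reference.

```python
class AddressingError(Exception):
    """Hypothetical stand-in for the trap raised on an out-of-range logical address."""


def translate(logical: int, relocation: int, limit: int) -> int:
    """Map a logical address to a physical address, as the MMU does in hardware."""
    # The limit check is applied to the logical address, before relocation.
    if not 0 <= logical < limit:
        raise AddressingError(f"trap: logical address {logical} outside [0, {limit})")
    # Legal address: add the relocation (base) register value.
    return logical + relocation


RELOCATION, LIMIT = 14000, 3000  # illustrative register values (assumed)

print(translate(346, RELOCATION, LIMIT))  # 14000 + 346
try:
    translate(3000, RELOCATION, LIMIT)    # equal to the limit, so it traps
except AddressingError as err:
    print(err)
```

Checking the limit on the logical address means the process only ever sees the range 0 to limit - 1, regardless of where the operating system actually placed it.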

Address Binding and Dynamic Loading

- Illustrate the facts and concepts for the following terms: Address Binding. Dynamic Loading.

Answer :

Address Binding refers to the process of mapping program instructions, variables and data to memory addresses. It can occur at compile time, load time, or execution time.

Compile-Time Binding : If the memory location where the program will reside is known in advance (at the time of compilation), the compiler generates absolute code with actual physical addresses.

Load-Time Binding : If it is not known at compile time where the program will be loaded in memory, the compiler generates relocatable code. The final physical addresses are determined when the program is loaded into memory. The operating system calculates the starting address (base address) at load time and adjusts all addresses accordingly.

Execution-Time Binding : If a process can move between different memory locations during execution, address binding is done at runtime. This is the most flexible form of binding and is supported by modern operating systems using hardware such as the Memory Management Unit (MMU). Here, logical addresses are generated by the program and are mapped to physical addresses during execution.

Address binding is crucial for memory protection, process isolation, and efficient memory utilization in multitasking environments.
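To contrast load-time and execution-time binding, here is a minimal Python sketch. The relocatable program is modelled as a hypothetical list of offsets from the start of the program, and `RelocationRegister` is an illustrative stand-in for the MMU, not a real API.

```python
from typing import List

# Hypothetical relocatable program: each reference is an offset from the program start.
RELOCATABLE_REFS: List[int] = [0, 14, 250]


def load_time_bind(refs: List[int], load_base: int) -> List[int]:
    """Load-time binding: the loader rewrites every reference once, at load time."""
    return [load_base + r for r in refs]


class RelocationRegister:
    """Execution-time binding: the base is added on every access."""

    def __init__(self, base: int) -> None:
        self.base = base

    def access(self, logical: int) -> int:
        return self.base + logical


print(load_time_bind(RELOCATABLE_REFS, 10000))  # addresses fixed at load time

mmu = RelocationRegister(base=10000)
print(mmu.access(14))  # translated using the current base
mmu.base = 50000       # the OS moves the process; the program itself is unchanged
print(mmu.access(14))  # the same logical address now maps to the new location
```

With load-time binding, moving the process after it is loaded would leave the rewritten addresses pointing at the old location, so the program would have to be reloaded. With execution-time binding, the operating system only needs to update the base register.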

Dynamic loading is a technique used to optimize memory usage by loading code into memory only when it is actually needed during execution, rather than loading the entire program at once.

In traditional systems, the complete program, along with all its subroutines, had to be loaded into memory before execution. This could waste memory, especially if some routines are never used.

With dynamic loading:

- Only the main program is loaded into memory initially.

- Other routines (functions or modules) are stored on disk in a relocatable format.

- When a routine calls another routine, the calling routine first checks whether the called routine has already been loaded.

- If not, a relocatable linking loader loads the routine into memory, updates the address references, and then transfers control to that routine.

This method reduces memory consumption and allows programs to run even if the physical memory is limited, as only the necessary parts are loaded on demand.
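The on-demand loading steps above can be simulated in Python. The `DISK` dictionary and the `DynamicLoader` class are hypothetical: the "disk" holds factory functions rather than relocatable machine code, and "loading" simply means building the routine and recording it as resident.

```python
from typing import Callable, Dict

Routine = Callable[[int], int]

# Hypothetical "disk": routine name -> factory that builds the routine when loaded.
DISK: Dict[str, Callable[[], Routine]] = {
    "square": lambda: (lambda x: x * x),
    "cube": lambda: (lambda x: x * x * x),
}


class DynamicLoader:
    """Keeps track of which routines are resident and loads others on demand."""

    def __init__(self, disk: Dict[str, Callable[[], Routine]]) -> None:
        self.disk = disk
        self.in_memory: Dict[str, Routine] = {}
        self.load_count = 0

    def call(self, name: str, arg: int) -> int:
        # First check whether the routine has already been loaded.
        if name not in self.in_memory:
            # Not resident: "load" it from disk and remember it.
            self.in_memory[name] = self.disk[name]()
            self.load_count += 1
        # Transfer control to the now-resident routine.
        return self.in_memory[name](arg)


loader = DynamicLoader(DISK)
print(loader.call("square", 4))  # loads "square", then calls it
print(loader.call("square", 5))  # already resident, so no second load
print(sorted(loader.in_memory), loader.load_count)  # "cube" was never loaded
```

The routine that is never called ("cube") never occupies memory, which is the saving dynamic loading aims for.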

Memory Allocation Strategies

- Distinguish between: First fit and Best fit algorithms.

- Discuss first-fit, best-fit, and worst-fit strategies of dynamic memory allocation.

- Explain the strategies for selecting the free hole.

Answer :

In contiguous memory allocation, each process is assigned a single continuous block of memory. As processes are created and terminated, free memory becomes divided into variable-sized blocks, called holes, scattered throughout memory; these holes can be reused by other processes.

To manage memory efficiently, the operating system uses allocation strategies to decide which free hole to assign to a new process that requests memory.

The most common strategies are First Fit, Best Fit, and Worst Fit.

First Fit : The memory is scanned from the beginning (or from where the previous search ended), and the first hole that is large enough to accommodate the process is selected.

Fast, but may lead to many small, unusable holes near the beginning of memory (external fragmentation).

Best Fit : The system searches all holes and chooses the smallest hole that is large enough for the process.

Produces the smallest leftover hole, but is slower because the entire list must be searched (unless it is kept sorted by size). It may also create many tiny holes that are too small to be used.

Worst Fit : The system searches all holes and assigns the process to the largest hole available.

Leaves the largest leftover hole, which may still be large enough for a future request. However, it also requires searching the entire list (unless it is sorted by size), and it may use up the large holes that bigger processes need.

Example Memory Allocation

- Given five memory partitions of 100KB, 500KB, 200KB, 300KB, and 600KB (in order). How would each of the first-fit, best-fit, and worst-fit algorithms place processes of 212KB, 417KB, 112KB, and 426KB (in order)? Which algorithm uses the memory efficiently?

Answer :

Memory Partitions (in KB):

100 KB, 500 KB, 200 KB, 300 KB, 600 KB

Processes (in KB):

212 KB, 417 KB, 112 KB, 426 KB

First Fit Allocation:

- 212 KB → Allocated to 500 KB block → Remaining: 288 KB

- 417 KB → Allocated to 600 KB block → Remaining: 183 KB

- 112 KB → Allocated to 288 KB hole (left over from the 500 KB block) → Remaining: 176 KB

- 426 KB → No block large enough is available

Resulting Memory Blocks:

- 100 KB (unused)

- 176 KB (from original 500 KB)

- 200 KB (unused)

- 300 KB (unused)

- 183 KB (from 600 KB)

Best Fit Allocation:

- 212 KB → Allocated to 300 KB block → Remaining: 88 KB

- 417 KB → Allocated to 500 KB block → Remaining: 83 KB

- 112 KB → Allocated to 200 KB block → Remaining: 88 KB

- 426 KB → Allocated to 600 KB block → Remaining: 174 KB

Resulting Memory Blocks:

- 100 KB (unused)

- 83 KB (from 500 KB)

- 88 KB (from 200 KB)

- 88 KB (from 300 KB)

- 174 KB (from 600 KB)

Worst Fit Allocation:

- 212 KB → Allocated to 600 KB block → Remaining: 388 KB

- 417 KB → Allocated to 500 KB block → Remaining: 83 KB

- 112 KB → Allocated to 388 KB block → Remaining: 276 KB

- 426 KB → No block large enough is available

Resulting Memory Blocks:

- 100 KB (unused)

- 83 KB (from 500 KB)

- 200 KB (unused)

- 300 KB (unused)

- 276 KB (from 600 KB)

- Best Fit successfully allocates all four processes.

- First Fit and Worst Fit fail to allocate the last process (426 KB).

- Best Fit makes the most efficient use of memory in this case by minimizing wasted space and maximizing the number of processes allocated.
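The three selection rules and the worked example can be checked with a short simulation. This sketch assumes, as the example does, that the leftover part of a partition stays behind as a smaller hole that later requests may use, and that first fit always scans from the beginning. A request that fits nowhere is simply recorded as unplaced (in a real system it would wait). `choose_hole` and `simulate` are illustrative names.

```python
from typing import List, Optional, Tuple


def choose_hole(holes: List[int], size: int, strategy: str) -> Optional[int]:
    """Return the index of the hole selected for a request, or None if none fits."""
    fits = [i for i, h in enumerate(holes) if h >= size]
    if not fits:
        return None
    if strategy == "first":
        return fits[0]                            # first hole (in address order) that fits
    if strategy == "best":
        return min(fits, key=lambda i: holes[i])  # smallest hole that fits
    if strategy == "worst":
        return max(fits, key=lambda i: holes[i])  # largest hole
    raise ValueError(f"unknown strategy: {strategy}")


def simulate(partitions: List[int], requests: List[int], strategy: str
             ) -> Tuple[List[Tuple[int, Optional[int]]], List[int]]:
    """Place requests in order; return (request, hole size used) pairs and final holes."""
    holes = list(partitions)
    placements: List[Tuple[int, Optional[int]]] = []
    for size in requests:
        i = choose_hole(holes, size, strategy)
        if i is None:
            placements.append((size, None))      # no hole is large enough
        else:
            placements.append((size, holes[i]))  # record the size of the hole used
            holes[i] -= size                     # the leftover stays as a smaller hole
    return placements, holes


PARTITIONS = [100, 500, 200, 300, 600]
REQUESTS = [212, 417, 112, 426]

for strategy in ("first", "best", "worst"):
    placements, holes = simulate(PARTITIONS, REQUESTS, strategy)
    print(strategy, placements, holes)
```

Under this model, first fit places 112 KB in the 288 KB hole left over from the 500 KB block and cannot place 426 KB, best fit places all four processes, and worst fit also fails on 426 KB because its largest remaining hole is 300 KB.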

Fragmentation

- Distinguish between internal fragmentation and external fragmentation. How do you overcome the problem of both?

- Write short notes on the following: Fragmentation.

Answer :

Fragmentation is a condition in memory management where free memory is broken into pieces, resulting in inefficient memory usage. It affects how memory is allocated to processes and can reduce overall system performance and memory utilization.

Internal Fragmentation occurs when memory is allocated in fixed-sized blocks (partitions) and a process does not use the entire block. The unused portion within the allocated block is wasted.

Example:

- If a process needs 28 KB and the system allocates a 32 KB block, 4 KB is left unused. This unused memory within the block is internal fragmentation.

Solution:

- Use smaller-sized blocks to minimize waste.

- Use variable-sized partitions (as in dynamic memory allocation) when possible.

- Employ paging with smaller page sizes to reduce internal waste.
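The 28 KB example and the effect of smaller block or page sizes can be worked out with a small helper. It assumes memory is handed out only in whole blocks of one fixed size, so each request is rounded up to the next multiple of the block size; the function name is illustrative.

```python
import math


def internal_fragmentation(request_kb: int, block_kb: int) -> int:
    """Unused space (KB) when a request is rounded up to whole fixed-size blocks."""
    blocks = math.ceil(request_kb / block_kb)  # number of blocks that must be allocated
    return blocks * block_kb - request_kb


print(internal_fragmentation(28, 32))  # one 32 KB block for 28 KB
print(internal_fragmentation(28, 4))   # seven 4 KB blocks hold 28 KB exactly
print(internal_fragmentation(29, 4))   # eight 4 KB blocks are needed for 29 KB
```

With 32 KB blocks the waste is 4 KB; with 4 KB blocks it can never exceed 3 KB for any request, which is why smaller pages reduce internal fragmentation.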

External fragmentation happens when free memory is divided into many small, non-contiguous blocks scattered across the memory space. It typically arises with variable-sized allocation strategies such as first fit and best fit. Even if the total free memory is sufficient for a process, the lack of a single large contiguous block prevents allocation.

Example:

- A process of 200 KB cannot be allocated even though the total free space is 300 KB, because it is scattered in blocks of 100 KB, 80 KB, and 120 KB.

Solution:

- Compaction: Rearranging memory contents to bring free memory blocks together into one large block.

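- Using paging, which allows a process’s memory to be non-contiguous and eliminates external fragmentation. Segmentation also lets a process’s segments be placed non-contiguously, but because each segment is variable-sized and must itself be contiguous, it can still suffer from external fragmentation.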
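The external-fragmentation example and compaction can be sketched in Python. The memory layout is hypothetical: the process names and sizes are made up, and only the hole sizes (100 KB, 80 KB and 120 KB) come from the example. Compaction here simply slides every allocated region to low memory and merges the free space into one hole; it ignores the cost of copying and the requirement that processes be relocatable at execution time.

```python
from typing import List, Optional, Tuple

# (owner, size in KB) regions in address order; owner None marks a hole.
Region = Tuple[Optional[str], int]

LAYOUT: List[Region] = [
    ("P1", 150), (None, 100), ("P2", 60), (None, 80), ("P3", 90), (None, 120),
]


def holes(layout: List[Region]) -> List[int]:
    """Sizes of the free regions, in address order."""
    return [size for owner, size in layout if owner is None]


def fits_contiguously(layout: List[Region], request_kb: int) -> bool:
    """True if some single hole can hold the whole request."""
    return any(h >= request_kb for h in holes(layout))


def compact(layout: List[Region]) -> List[Region]:
    """Move allocated regions together (keeping their order) and merge free space."""
    allocated = [r for r in layout if r[0] is not None]
    free = sum(holes(layout))
    return allocated + ([(None, free)] if free else [])


print(sum(holes(LAYOUT)), fits_contiguously(LAYOUT, 200))  # enough in total, no single hole
compacted = compact(LAYOUT)
print(holes(compacted), fits_contiguously(compacted, 200))  # one 300 KB hole after compaction
```

Before compaction the 200 KB request fails even though 300 KB is free; afterwards the same 300 KB forms one hole and the request fits.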

Segmentation

- What is Segmentation? Illustrate it with a neat sketch.

- With a neat diagram, explain the concept of segmentation. What are the advantages of segmentation?

Answer :

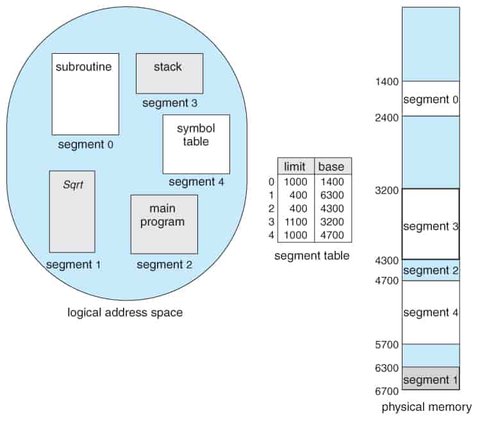

Segmentation is a memory management technique that divides a program’s memory into variable-sized segments based on the logical divisions in the program, such as functions, data structures, arrays, stacks, and the main program. Unlike paging, which divides memory into fixed-size blocks, segmentation uses blocks of varying sizes that reflect how a programmer logically organizes code and data.

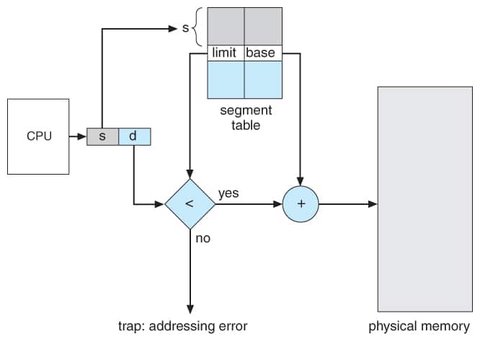

Each segment in logical address space is identified by a segment number and accessed through an offset within that segment.

- Segment Number (s) – identifies which segment to access (Used as an index to the segment table).

- Offset (d) – the location within the segment (the displacement from the segment’s base).

The system maintains a segment table for each process. Each entry in the segment table contains:

- Base: The starting physical address of the segment in memory.

- Limit: The length of the segment (i.e., the maximum legal offset).

To access a memory location:

- The CPU generates a logical address (segment number and offset).

- The segment number is used to index into the segment table.

- The offset is checked against the segment’s limit to ensure it is valid: it must satisfy 0 ≤ d < limit.

- If valid, the offset is added to the segment’s base address to compute the physical address of that data.

If the offset is greater than or equal to the limit, a trap (memory access violation) is raised by the hardware.
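The lookup steps above can be sketched in Python. The segment-table values are illustrative, and the check that the segment number lies within the table is an extra safeguard assumed here; the offset check and the base-plus-offset addition follow the steps listed above. `translate`, `SegmentEntry` and `SegmentationFault` are hypothetical names.

```python
from typing import List, NamedTuple


class SegmentEntry(NamedTuple):
    base: int   # starting physical address of the segment
    limit: int  # length of the segment


class SegmentationFault(Exception):
    """Hypothetical stand-in for the hardware trap on an illegal access."""


def translate(table: List[SegmentEntry], s: int, d: int) -> int:
    """Translate the logical address (s, d) into a physical address."""
    if not 0 <= s < len(table):             # assumed extra check on the segment number
        raise SegmentationFault(f"trap: no segment {s}")
    entry = table[s]                        # index the segment table with s
    if not 0 <= d < entry.limit:            # offset must satisfy 0 <= d < limit
        raise SegmentationFault(f"trap: offset {d} not below limit {entry.limit}")
    return entry.base + d                   # physical address = base + offset


# Illustrative segment table for one process.
SEGMENT_TABLE = [
    SegmentEntry(base=1400, limit=1000),
    SegmentEntry(base=6300, limit=400),
    SegmentEntry(base=4300, limit=400),
    SegmentEntry(base=3200, limit=1100),
    SegmentEntry(base=4700, limit=1000),
]

print(translate(SEGMENT_TABLE, 2, 53))   # byte 53 of segment 2
print(translate(SEGMENT_TABLE, 3, 852))  # byte 852 of segment 3
try:
    translate(SEGMENT_TABLE, 0, 1222)    # segment 0 is only 1000 bytes long
except SegmentationFault as err:
    print(err)
```

Because each segment has its own base and limit, the segments of one process can sit anywhere in physical memory, in any order.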